I recently spoke with a very experienced, very frustrated community organizer. She had just come back from a meeting with a funder who repeatedly admonished her to provide measurable evidence that her project is making a difference.

“For me, data is a four-letter word,” she stated, only half-joking. “Some things we do aren’t easy to measure or prove, but I know they work, and I’m not going to stop doing them just because I can’t measure them!”

I can relate. Though a lifelong community builder, I have often avoided the data question. “We don’t need more data, we need more money to do what we know works,” I would tell myself. More recently, I’ve come to understand that building complex initiatives without using data brings to mind Yogi Berra’s quip: “We don’t know where we’re going, but we’re making good time.”

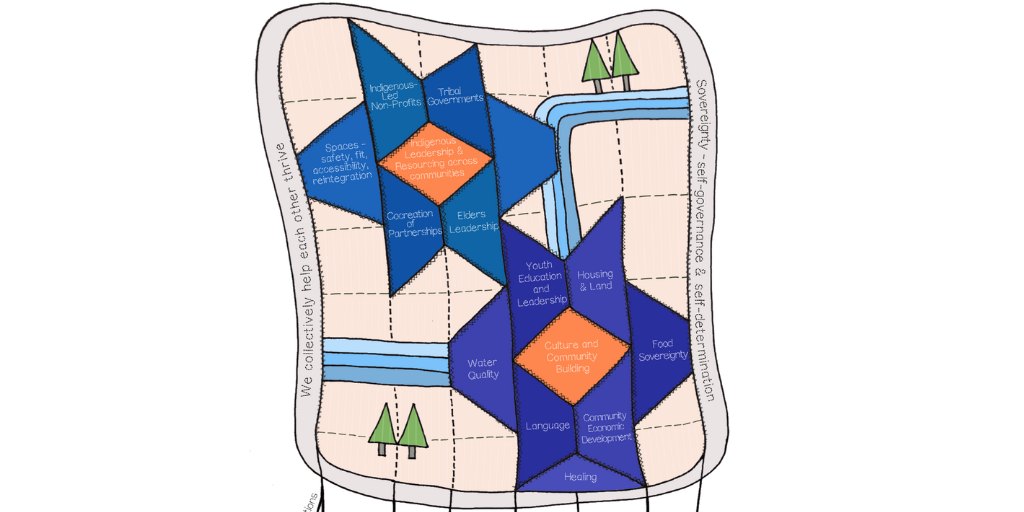

Before launching a new initiative, I often advise groups to ask three questions: Where are we now? Where do we want to be? How will we know if we are getting there? For example, in the early learning collective impact work I’m doing with Montana communities, I ask: What’s a good-enough estimate of how many kids are coming to kindergarten under-prepared? How does that measure up to our hopes in our community?

The term “good-enough” is key. Practitioners often get frustrated by the limits of what data can tell us, and so they either jump down the rabbit hole, looking for a level of specificity that is unrealistic given the resources at hand, or they throw their hands up in the air and vow to boycott data forevermore.

There’s a great phrase to describe the limits of data: “a yardstick made of Jell-O,” coined by Harvard psychologist Dan Gilbert. Gilbert is describing the importance of being able to extrapolate a reasonable amount of insight from an imperfect world of data.

For instance, at the first communitywide meeting we convened to launch Montana’s early learning collective impact initiative, we created a data gallery of four “good-enough” data points: one estimating the number and percentage of kids coming to kindergarten under-prepared; one describing the under-utilization of important public health services, such as home visiting; and one describing the tightening market for affordable child care. The fourth data point was a national trend, and we asked participants whether it reflected what we see in Montana.

The value of the data gallery was threefold: it helped participants see how the worlds of education, health care and child care are interrelated; it allowed people with differing levels of expertise and experience to share their perspectives; and it gave the steering group insights into potential areas of focus. In other words, we had a starting place!

This May, I’ll be traveling to Kitchener, Canada, to participate in the Tamarack Institute’s Collective Impact 3.0 conference. “Collective Impact 3.0” is a reference to a paper that Mark Cabaj and Liz Weaver released earlier this year, in which they seek to upgrade the five conditions of Collective Impact into a movement-building paradigm.

Concerning shared measurement, Cabaj and Weaver suggest that a “data-light and learning-heavy” approach to data and evaluation can result in more effective collaborations. This has certainly been my experience, and I look forward to learning more about what’s working in other communities as we continue to evolve our understanding of our approaches and impacts.