evaluating impact

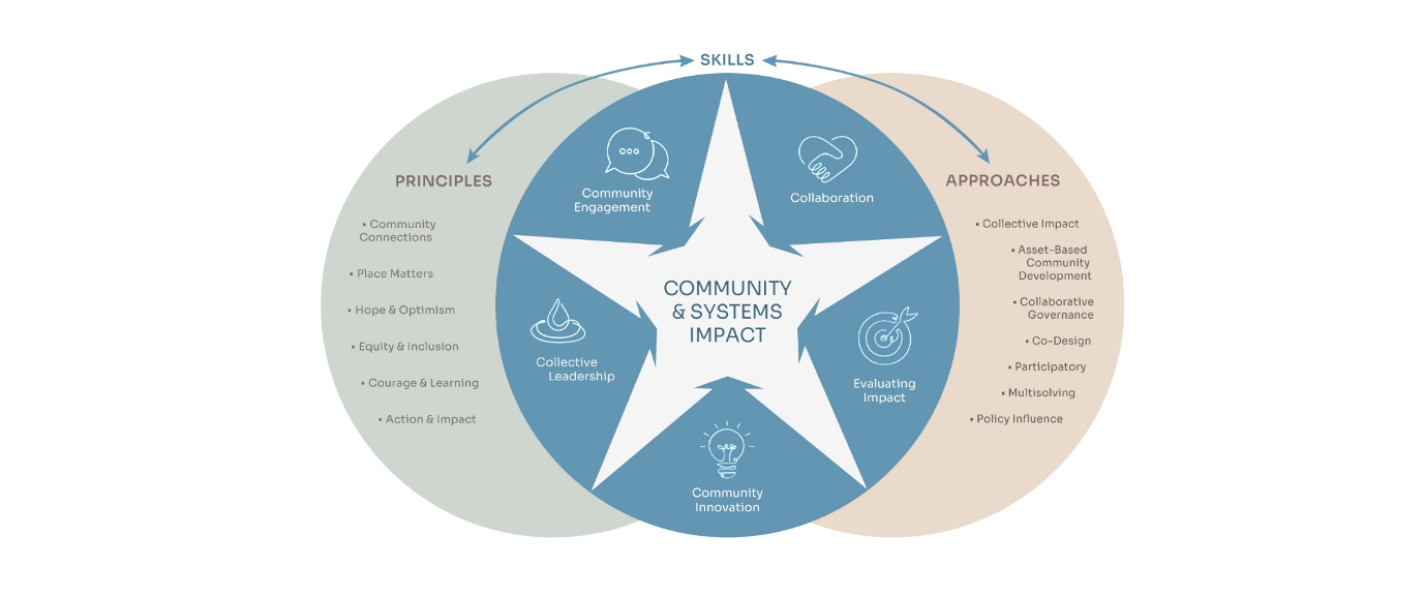

Evaluating impact is an essential skill in community change. It is necessary for understanding what works and what doesn't, and for learning together what needs to happen next.

We are deeply convinced that local and collaborative approaches are a crucial lever for solving complex social issues. Evidence is an important building block of a strong foundation for community change: it can help strengthen participation, commitment and capacity.

With the right questions and the right set of evaluation principles, values, and tools, communities can make impressive progress on the challenges they are facing.

Strengthening Evaluation Literacy

Over time, reframing evaluation in our work to be more participatory and collaborative leads to higher levels of learning, strengthened community relations, and greater transparency.

Drawing on our experience, passion, and curiosity, we promote evaluation as a tool for action, impact and engagement in communities, and we accompany individuals and groups in their learning processes.

getting started

Developing Evaluations That Are Used

Evaluating Collective Impact: Five Simple Rules

Shared Measurement: The Why is Clear, the How Continues to Develop

Assessing Readiness for Community-Engaged Evaluation

Collective Impact & Shared Measurement

Developing an Evaluation User Profile

Meet the director!

Dr. Chúk Odenigbo is the Consulting Director of Impact Evaluation at the Tamarack Institute Learning Centre. Proudly Franco-Albertan, Chúk is passionate about the ways in which the environment affects human health and the role of justice in our understanding of how our societies function. This passion shows in his work, which focuses on environmental and climate justice: he strives not only to make green spaces accessible to everyone, but also to fight environmental racism in all its forms and to popularise the understanding that you cannot create an environmentally friendly society if that same society is not just.

Chúk has worked in a variety of industries, including oil and gas, fashion retail, and academia. He is interested in the multidisciplinary nature of our existence and in exploring new solutions to recurring problems. Chúk was ranked among the top 30 Sustainability Leaders under 30 by Corporate Knights, the top 30 Change-Making Albertans under 30 by the Alberta Council for Global Cooperation, and the top 10 young Franco-Albertan leaders by Radio-Canada. For three years, he was also ranked among the top 25 environmentalists under 25 in Canada by the Starfish. Chúk was awarded the Eugène C.-Trottier award for his contribution to making his community more visible and more welcoming for all, and was named the 43rd Ashley Fellow at Trent University, the youngest person and the first Black person to receive both of these distinctions.

Chúk's Office Hours

Wednesdays 1:00–3:00 p.m. MT

Free 15-minute consultations

Book a consultation with Chúk!

Current Themes Being Explored:

- Getting to practice and keeping it simple

- Supporting communities in their evaluation processes

- Participatory approaches in virtual mode

- Equity lens on evaluation and learning practice

- Transformation evaluation

- Future thinking

- System mapping

- Communicating impact (impact reports and communication strategies)

Evaluating Community Impact

Community Engagement

At Tamarack, we are experimenting with new ways of measuring change, exploring who is responsible for outcomes, developing methods that can keep up with the fast pace of community change activities, using alternative approaches to involve changemakers in the assessment process itself, and using the results to drive new thinking, better strategies and deeper impact.

upcoming events

3/5/26 1:00 PM EST

3/11/26 12:00 PM EDT

3/18/26 1:00 PM EDT

3/26/26 1:00 PM EDT

5/14/26 1:00 PM EDT

5/20/26 1:00 PM EDT

7/15/26 1:00 PM EDT

9/23/26 1:00 PM EDT

get in touch

We’re happy to answer questions, discuss community issues and direct you to trusted resources. Get in touch with a specific team member or submit a general inquiry.

