Building an evaluation agenda for the Nonprofit Sector

This post was originally published on the AEA365 blog.

Hello, we are Andrew Taylor, from Taylor Newberry Consulting, and Ben Liadsky, from the Ontario Nonprofit Network (ONN). ONN is a provincial network that works to promote a healthy nonprofit sector by analyzing and interpreting trends in the sector as well as provincial legislation and policy. Today, we want to share our work to develop a Sector Driven Evaluation Strategy, which aims to empower Ontario nonprofits to become more actively involved in setting the evaluation agenda.

Ontario nonprofit leaders are under a lot of pressure to evaluate their work. Although they appreciate the potential of evaluation, they often tell us that they find the process frustrating and stressful in practice. We set out to understand this frustration by speaking with nonprofits, funders, government reps, and evaluators from around the province. It turns out that the reasons are pretty clear and consistent with what research on evaluation utilization would predict. When nonprofits get frustrated with evaluation, it is because they haven't had input into the design, the questions aren't meaningful to them, communication is insufficient or vague, and they don't know who will use the findings or whether the findings will even be read.

Interestingly, nonprofits don't always find evaluation frustrating. The problem seems to come up mostly when a funder requires the evaluation as part of accountability for grant money. When evaluation work happens outside of this context, it tends to go pretty well. This is the case, for example, when a funder and grant recipient have an ongoing relationship in which they share responsibility for evaluation and give each other permission to make mistakes, or when a network of organizations that have no accountability power over one another undertakes evaluation collaboratively.

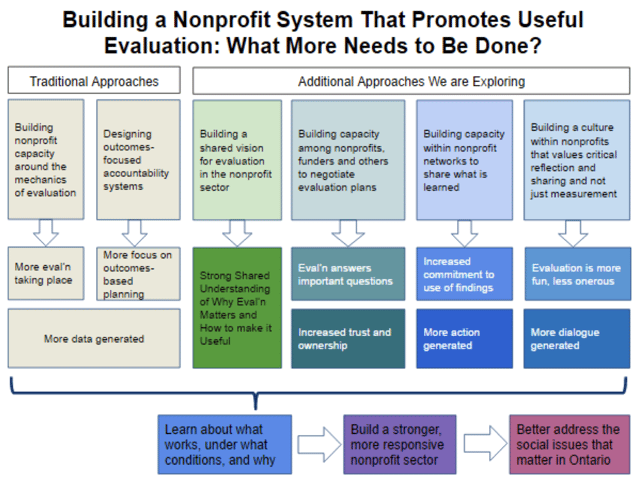

Lesson Learned: Efforts to improve evaluation often begin with the assumption that the problem is a lack of skill, resources, or interest within nonprofits. As a result, capacity building tends to focus on the mechanics of how to do evaluation. Our findings suggest that the problem may have more to do with the fact that evaluation is often undertaken for poorly articulated reasons, within relationships that are not based on trust, and with insufficient attention to alignment between methodologies, approaches, and intended uses.

Rad Resources: As we move into the second phase of our project, we will develop resources to help nonprofits become more strategic in their relationship to evaluation work and better positioned to negotiate evaluation agreements that are likely to produce useful results. The emerging logic model below lists some of the strategies we are considering.

Check out more and read our full report by visiting our website.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please visit the aea365 webpage and contribute to the conversation so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org

aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.